Podcasts are great, but they have a discovery problem. The technology to change that is available.

Today we are releasing a private beta version of automatic speech recognition integrated into Auphonic.

UPDATE: Please read our new and updated blog post, Make Podcasts Searchable (Auphonic Speech To Text Public Beta)!

Automatic Speech Recognition in Auphonic

Until recently, most automatic speech recognition services were either very expensive or of poor quality.

Broadcasting corporations spent a lot of money generating automatic transcripts to make their audio searchable.

That has changed: a couple of affordable (even free) services are now available that can produce high-quality transcripts in multiple languages.

Although these services exist, it is still very complicated to generate meaningful transcripts with timestamps.

That's why we built an Auphonic layer on top of multiple speech recognition systems:

Our classifiers generate automatic metadata during the analysis of an audio signal (music segments, silence, multiple speakers, etc.) and use it to divide the audio file into small, meaningful segments, which are then sent to the speech recognition engine.
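As a rough illustration of this idea, the following Python sketch takes hypothetical classifier output as labeled `(start, end, label)` intervals in seconds, keeps only the speech regions, and splits long ones into chunks no longer than a maximum duration. The labels, the 15-second limit, and the name `speech_chunks` are assumptions for illustration, not Auphonic's actual implementation.

```python
from typing import List, Tuple

# One classifier decision: (start_seconds, end_seconds, label).
# The labels "speech", "music" and "silence" are hypothetical.
Segment = Tuple[float, float, str]


def speech_chunks(segments: List[Segment], max_len: float = 15.0) -> List[Tuple[float, float]]:
    """Return (start, end) chunks that cover only the speech regions.

    Non-speech regions (music, silence, ...) are dropped, and speech regions
    longer than max_len seconds are split into consecutive pieces so that
    each request to a recognition engine stays short.
    """
    chunks: List[Tuple[float, float]] = []
    for start, end, label in segments:
        if label != "speech":
            continue  # skip music, silence, etc.
        t = start
        while t < end:
            chunk_end = min(t + max_len, end)
            chunks.append((t, chunk_end))
            t = chunk_end
    return chunks


if __name__ == "__main__":
    decisions = [
        (0.0, 8.0, "music"),
        (8.0, 40.0, "speech"),
        (40.0, 42.0, "silence"),
        (42.0, 50.0, "speech"),
    ]
    print(speech_chunks(decisions))
    # [(8.0, 23.0), (23.0, 38.0), (38.0, 40.0), (42.0, 50.0)]
```

Splitting at fixed intervals keeps the sketch short; a real segmenter would rather cut at short pauses so that words are not broken across chunks.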

If you use our multitrack algorithms, it is even possible to add speaker names to each transcribed audio segment.
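With one track per speaker, one simple way to attach a name to a transcribed segment is to pick the track that is active for the longest part of that segment. The sketch below assumes per-track speech activity is already available as `(start, end)` intervals; the speaker names and this longest-overlap rule are illustrative assumptions, not a description of Auphonic's multitrack algorithms.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]  # (start_seconds, end_seconds)


def overlap(a: Interval, b: Interval) -> float:
    """Length in seconds of the overlap between two intervals (0 if disjoint)."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def assign_speaker(segment: Interval, activity: Dict[str, List[Interval]]) -> str:
    """Return the speaker whose track is active for the longest time within segment.

    activity maps a speaker name (one per multitrack input) to the intervals
    in which that track contains speech. Returns "unknown" if no track overlaps.
    """
    best_name, best_time = "unknown", 0.0
    for name, intervals in activity.items():
        total = sum(overlap(segment, iv) for iv in intervals)
        if total > best_time:
            best_name, best_time = name, total
    return best_name


if __name__ == "__main__":
    # Hypothetical speakers and activity intervals.
    activity = {"Anna": [(0.0, 12.0)], "Ben": [(10.0, 30.0)]}
    print(assign_speaker((8.0, 20.0), activity))  # Ben (10 s vs. 4 s for Anna)
```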

How can I use it?

If you participate in our private beta program, you can connect your Auphonic account to a speech recognition service on the External Services page.

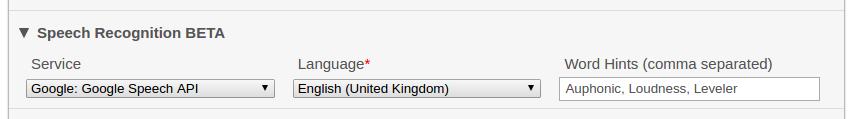

When you create a production or preset, select the speech recognition engine and set its parameters (language, word hints) in the "Speech Recognition BETA" section (see screenshot above).

Auphonic will create short audio segments from your production and send them to the speech recognition engine. The results are then combined with our classifier decisions to generate an SRT output file (other file formats will follow).
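For readers unfamiliar with SRT, the following Python sketch shows how timed recognition results could be written in that format: each cue is a running number, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` time range, the text, and a blank line. The `(start, end, text)` input and the "Name: text" speaker prefix are assumptions for illustration; Auphonic's actual output may be laid out differently.

```python
from typing import List, Tuple

# (start_seconds, end_seconds, text) for one recognized segment.
Cue = Tuple[float, float, str]


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues: List[Cue]) -> str:
    """Build an SRT document: number, time range, text, then a blank line."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical transcript segments with speaker names prefixed.
    print(to_srt([
        (8.0, 12.5, "Anna: Welcome to the show."),
        (12.5, 15.0, "Ben: Thanks for having me!"),
    ]))
```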

At the moment, we support the following two speech recognition services: Wit.ai and the Google Cloud Speech API.

We will add additional services if they can provide better quality for some languages, lower price or faster recognition.

- Wit.ai provides an online natural language processing platform, which also includes speech recognition.

  - Wit is free, including for commercial use, and supports many languages. See FAQ and Terms.

  - You can correct transcriptions in the Wit.ai online interface (go to Inbox -> Voice) to get speech recognition that is specialized for your material, with improved accuracy.

  - Because it is free, Wit is especially interesting.

- The Google Cloud Speech API is Google's speech-to-text engine and supports over 80 languages.

  - 60 minutes of audio per month are free; for more, see Pricing.

  - You can add Word Hints to improve recognition accuracy for specific words and phrases, or to add words to the vocabulary (see screenshot above). For details, see Word and Phrase Hints.

  - The Google Cloud Speech API is still in beta, but it already delivers great recognition quality. Word Hints are very useful, and the system will improve over time.
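To show where Word Hints end up in a request, here is a Python sketch that builds a JSON body for the Google Cloud Speech-to-Text v1 `speech:recognize` REST method, with hints in `config.speechContexts[].phrases`. This uses the v1 field names (the beta API available when this post was written may have named fields differently), assumes 16 kHz mono LINEAR16 audio, and is not a description of how Auphonic itself calls the service; `build_recognize_request` is a hypothetical helper.

```python
import base64
import json
from typing import List


def build_recognize_request(audio_bytes: bytes, language: str, hints: List[str]) -> str:
    """Return a JSON body for Speech-to-Text v1 `speech:recognize`.

    Word hints go into config.speechContexts[].phrases, and the audio chunk
    is sent inline as base64. Assumes 16 kHz mono LINEAR16 (raw PCM) audio.
    """
    body = {
        "config": {
            "encoding": "LINEAR16",
            "sampleRateHertz": 16000,
            "languageCode": language,
            "speechContexts": [{"phrases": hints}],
        },
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }
    return json.dumps(body)


if __name__ == "__main__":
    # Placeholder audio; a real chunk would be raw 16-bit PCM samples.
    print(build_recognize_request(b"\x00\x01", "en-US", ["Auphonic", "multitrack"]))
```

The resulting body would be POSTed to `https://speech.googleapis.com/v1/speech:recognize` with valid Google Cloud credentials; authentication is left out of the sketch.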

Please send Feedback!

We are still at the very beginning of our speech recognition integration and will continue to work on it in the coming months.

Please send feedback about the following (and other) topics:

- Which other speech recognition result output file formats do you need?

- Is there anything we can do to make it easier to integrate the results into podcast (web) players or web pages?

- Which services work well for which languages?

- What are common problems?

- Which other speech recognition engines should be integrated?
